AudioBufferSourceNode

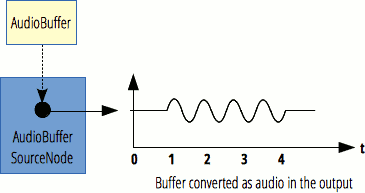

The AudioBufferSourceNode interface is an AudioScheduledSourceNode which represents an audio source consisting of in-memory audio data, stored in an AudioBuffer.

This interface is especially useful for playing back audio that has stringent timing accuracy requirements, such as sounds that must match a specific rhythm and can be kept in memory rather than being played from disk or the network. To play sounds that require accurate timing but must be streamed from the network or played from disk, use an AudioWorkletNode to implement playback.

An AudioBufferSourceNode has no inputs and exactly one output, which has the same number of channels as the AudioBuffer indicated by its buffer property. If there's no buffer set—that is, if buffer is null—the output contains a single channel of silence (every sample is 0).

An AudioBufferSourceNode can only be played once; after each call to start(), you have to create a new node if you want to play the same sound again. Fortunately, these nodes are very inexpensive to create, and the actual AudioBuffers can be reused for multiple plays of the sound. Indeed, you can use these nodes in a "fire and forget" manner: create the node, call start() to begin playing the sound, and don't even bother to hold a reference to it. It will automatically be garbage-collected at an appropriate time, which won't be until sometime after the sound has finished playing.
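The "fire and forget" pattern described above can be sketched as a small helper. This is a minimal illustration, not part of the API: the name `playOnce` and its parameters are hypothetical, and it assumes `ctx` is an AudioContext and `buffer` an already-filled AudioBuffer.

```javascript
// Hypothetical helper (not part of the Web Audio API): plays `buffer` once.
// A fresh AudioBufferSourceNode is created on every call because a node
// can only be started once; the AudioBuffer itself is reused.
function playOnce(ctx, buffer, when = 0) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);
  source.start(when); // 0 means "start as soon as possible"
  return source; // callers are free to ignore the returned node
}
```

Calling `playOnce(audioCtx, myBuffer)` twice plays the same sound twice through two separate nodes that share one AudioBuffer.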

Multiple calls to stop() are allowed. The most recent call replaces the previous one, if the AudioBufferSourceNode has not already reached the end of the buffer.
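As an illustration of rescheduling a stop, the sketch below starts a source and then calls stop() twice. The function name `startWithRevisedStop` and the two-second and half-second offsets are arbitrary choices for the example, and `ctx` and `buffer` are assumed to be an AudioContext and an AudioBuffer.

```javascript
// Hypothetical helper: starts playback, schedules a stop, then revises it.
function startWithRevisedStop(ctx, buffer) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);

  const now = ctx.currentTime;
  source.start(now);
  source.stop(now + 2); // first request: stop two seconds from now
  source.stop(now + 0.5); // later request replaces the earlier one
  return source;
}
```

Note that stop() is called only after start() here; the second call takes effect as long as the end of the buffer has not already been reached.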

| Property | Value |
|---|---|
| Number of inputs | 0 |
| Number of outputs | 1 |
| Channel count | defined by the associated AudioBuffer |

Constructor

AudioBufferSourceNode()
: Creates and returns a new AudioBufferSourceNode object. As an alternative, you can use the BaseAudioContext.createBufferSource() factory method; see Creating an AudioNode.
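The two creation routes can be compared side by side. This is a sketch under assumptions: the function name `createLoopingSources` is hypothetical, and `ctx` and `buffer` stand for an existing AudioContext and AudioBuffer. The constructor accepts an options object whose keys mirror the instance properties listed below, while the factory method returns a node that is configured afterwards.

```javascript
// Hypothetical helper: builds the same looping source in two ways.
function createLoopingSources(ctx, buffer) {
  // Route 1: constructor, with options supplied up front
  const viaConstructor = new AudioBufferSourceNode(ctx, {
    buffer,
    loop: true,
  });

  // Route 2: factory method, with properties set afterwards
  const viaFactory = ctx.createBufferSource();
  viaFactory.buffer = buffer;
  viaFactory.loop = true;

  return [viaConstructor, viaFactory];
}
```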

Instance properties

Inherits properties from its parent, AudioScheduledSourceNode.

AudioBufferSourceNode.buffer
: An AudioBuffer that defines the audio asset to be played, or when set to the value null, defines a single channel of silence (in which every sample is 0.0).

AudioBufferSourceNode.detune
: A k-rate AudioParam representing detuning of playback in cents. This value is compounded with playbackRate to determine the speed at which the sound is played. Its default value is 0 (meaning no detuning), and its nominal range is -∞ to ∞.

AudioBufferSourceNode.loop
: A Boolean attribute indicating if the audio asset must be replayed when the end of the AudioBuffer is reached. Its default value is false.

AudioBufferSourceNode.loopStart (optional)
: A floating-point value indicating the time, in seconds, at which playback of the AudioBuffer must begin when loop is true. Its default value is 0 (meaning that at the beginning of each loop, playback begins at the start of the audio buffer).

AudioBufferSourceNode.loopEnd (optional)
: A floating-point number indicating the time, in seconds, at which playback of the AudioBuffer stops and loops back to the time indicated by loopStart, if loop is true. The default value is 0.

AudioBufferSourceNode.playbackRate
: A k-rate AudioParam that defines the speed factor at which the audio asset will be played, where a value of 1.0 is the sound's natural sampling rate. Since no pitch correction is applied on the output, this can be used to change the pitch of the sample. This value is compounded with detune to determine the final playback rate.
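To make the compounding of playbackRate and detune concrete, the sketch below computes the effective rate. The function name `computedPlaybackRate` is a hypothetical helper, not a method on the node; the formula, playbackRate multiplied by 2 raised to (detune / 1200), follows the Web Audio specification's definition of the computed playback rate, where 1200 cents equal one octave.

```javascript
// Hypothetical helper: effective speed factor from the two parameters.
// detuneCents is in cents; 100 cents is one semitone, 1200 is one octave.
function computedPlaybackRate(playbackRate, detuneCents) {
  return playbackRate * Math.pow(2, detuneCents / 1200);
}
```

For example, a playbackRate of 1 with a detune of 1200 plays at double speed (one octave up), and a playbackRate of 2 with a detune of -1200 plays at the natural rate.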

Instance methods

Inherits methods from its parent, AudioScheduledSourceNode, and overrides the following method:

start()
: Schedules playback of the audio data contained in the buffer, or begins playback immediately. Additionally allows the start offset and play duration to be set.
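A short sketch of the offset and duration arguments follows. The helper name `playSlice` is hypothetical, and `ctx` and `buffer` are assumed to be an AudioContext and an AudioBuffer; the call itself uses the start(when, offset, duration) form, with all three values in seconds.

```javascript
// Hypothetical helper: plays only part of a buffer.
// offset   - position in the buffer, in seconds, where playback begins
// duration - how many seconds of audio to play from that position
function playSlice(ctx, buffer, offset, duration) {
  const source = ctx.createBufferSource();
  source.buffer = buffer;
  source.connect(ctx.destination);
  source.start(ctx.currentTime, offset, duration);
  return source;
}
```

For instance, `playSlice(audioCtx, myBuffer, 1, 0.5)` would play half a second of audio beginning one second into the buffer.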

Event handlers

Inherits event handlers from its parent, AudioScheduledSourceNode.

Examples

In this example, we create a three-second buffer, fill it with white noise, and then play it using an AudioBufferSourceNode. The comments explain each step.


```js
const audioCtx = new (window.AudioContext || window.webkitAudioContext)();

// Create an empty three-second stereo buffer at the sample rate of the AudioContext
const myArrayBuffer = audioCtx.createBuffer(
  2,
  audioCtx.sampleRate * 3,
  audioCtx.sampleRate,
);

// Fill the buffer with white noise:
// just random values between -1.0 and 1.0
for (let channel = 0; channel < myArrayBuffer.numberOfChannels; channel++) {
  // This gives us the Float32Array that holds this channel's samples
  const nowBuffering = myArrayBuffer.getChannelData(channel);
  for (let i = 0; i < myArrayBuffer.length; i++) {
    // Math.random() is in [0, 1.0)
    // audio needs to be in [-1.0, 1.0]
    nowBuffering[i] = Math.random() * 2 - 1;
  }
}

// Get an AudioBufferSourceNode.
// This is the AudioNode to use when we want to play an AudioBuffer
const source = audioCtx.createBufferSource();

// Set the buffer in the AudioBufferSourceNode
source.buffer = myArrayBuffer;

// Connect the AudioBufferSourceNode to the
// destination so we can hear the sound
source.connect(audioCtx.destination);

// Start the source playing
source.start();
```

Note: For a decodeAudioData() example, see the AudioContext.decodeAudioData() page.

Specifications

| Specification |
|---|
| Web Audio API # AudioBufferSourceNode |
